User Experience. A New Dimension of Abstract

This article is also published on Medium.

TL;DR (what this article is about)

I spent half a year and 500 hours of my life creating a free tool that shows 896 viewing angles on the most common questions in Product Management from the perspective of cognitive biases (scientifically proven patterns of human thinking).

63 questions and 896 answers that can be easily extrapolated to hundreds of other questions. Minimum opinion. Maximum science.

In a nutshell, the project's goal is to create a tool that serves managers the way software design patterns serve software engineers: it makes their thinking more efficient by keeping a few generic, default truths in mind.

Project URL: https://keepsimple.io/uxcg

Table of contents (6-minute read)

- Intro and UX Core statistics

- Too Abstract

- Leveling the Odds

- User Experience Core Guide (UXCG)

- Project Future

1. Intro and UX Core statistics

Hello. My name is Wolf. Last year, I completed 2.5 years of research on cognitive biases and launched the UX Core project at the end of July. In it, I wrote simplified descriptions of 105 cognitive biases, each with an example of how it applies in the IT domain (product management). The project's main goal was to show people how many errors creep into our thought processes and how little we know about ourselves. I also wanted to show the underestimated potential of this knowledge, which we ignore in everyday life.

I had no idea if anyone would need this tool. I had no plans to advertise it in any way, and the whole "promotion" of the project was limited to one article in two languages (for Russian and English audiences), as well as several posts on Reddit.

From the beginning of the project, I thought that UX Core could only be useful to a narrow circle of specialists despite the importance of the topic. However, the feedback I received in the first five months exceeded all my expectations.

More than ten thousand people visited the project in the first weeks. The largest share of users came from the US (36.6%) and other English-speaking countries. By the fourth month, the top countries of visitors were Russia (39%), the USA (17.8%), and Ukraine (12.1%). I received messages from employees of Silicon Valley companies (Google, Amazon, GitHub, etc.), startup owners, directors of venture funds, students from different universities, and others.

I was also honored to present UX Core on the popular ProductMomentum podcast, whose earlier guests include the founding fathers of Product Management (Marty Cagan and his colleagues from the Silicon Valley Product Group).

By May 2021, tens of thousands of people had visited the project. Currently, the top 3 countries of visitors are Russia (35.13%), the USA (20.47%), and Ukraine (11.94%). The following biases received the highest average "usefulness" rating: #3 Illusory truth effect, #6 Cue-dependent forgetting, #24 Weber-Fechner Law, #84 IKEA effect, #101 Peak-end rule.

All the words of support and interest I received from the community motivated me to take UX Core one step further.

2. Too Abstract

“It shouldn't be something that only tech industry knows. It should be something that everybody knows.” ©Tristan Harris – former design ethicist at Google

Tristan became widely known for opposing the well-established practice among megacorporations (Google, Facebook, Amazon, Apple, etc.) of exploiting cognitive biases for gross manipulation and profit. The apogee of his advocacy for user rights was the 2020 film "The Social Dilemma," in which he and other former senior employees of these companies lifted the curtain on how extensively they manipulate the users of their products.

Of course, the goals of Tristan and of everyone taking part in such initiatives are noble. However, the problem I see in their articles, films, TED talks, and so on is that it's all too abstract.

Do people understand that IT giants are manipulating them? Yes.

Do they think that these manipulations do not apply to them? For the most part, yes.

Can megacorporations be urged to stop using scientifically proven methods of nudging people? No. Will people be constantly manipulated? Certainly.

If someone, in theory, came up with a mechanism to limit how corporations work with people's subconscious, the same mechanism could, with equal legitimacy, be used to limit political campaigns. Sounds great? Maybe. But no government will ever approve it. Working with people's subconscious is the bread and butter of successful politicians, careerists, businessmen, and, of course, megacorporations.

What should those who care about users' rights do?

Instead of going against science and trying to create abstract mechanisms that regulate how products work with users' subconscious, I propose leveling the odds for all players. We all agree that knowledge is power, but when big players use this power, we tend to throw rocks at them without even trying to master this knowledge ourselves. Meanwhile, this power, this knowledge, is public, and all that is required of us is to make it more accessible and convenient for society.

Instead of spending thousands of hours producing grandiloquent texts about how evil corporations are and how they manipulate "poor people," we can devote a tenth of that time to creating tools that raise awareness in society. We can provide these tools to small and medium businesses. We can make the findings of cognitive science required study for as many specialists in as many fields as possible. We can "disarm" megacorporations, politicians, and anyone else by popularizing a conscious understanding of manipulation and nudging techniques.

This is one of the reasons that drove me to publish UX Core in 2020.

3. Leveling the Odds

Going back to UX Core: of course, not all the feedback I received was positive. Many visitors kept asking the same question: "How do I use this?"

A typical dialogue with users on this topic went like this:

User: How can we use UX Core? We would like to know which bias to take into account in our situation.

Me: I understand you, but there is more than one bias connected to any issue <...>

User: So be it. Which biases are relevant for our case?

Me: It's not that simple <...>

And indeed, people wanted to use UX Core in a more practical way, but all they got were descriptions and examples of biases. No filters. Weak structure. I could not categorize the biases in any way, because none of them is inherently "particularly important" or "more important": a bias can be fundamental in one situation and completely useless in another.

It is easier for major IT companies to use this knowledge. They have staff members, such as Tristan Harris, who are given a specific task to solve. They know the task. They know their goals and success criteria. This allows them to understand which biases to take into account and how exactly to use them.

I considered many ways to make UX Core more useful. I didn't want to add filters like "Useful biases for mobile applications" or "Useful biases for online stores": they would be too abstract and would miss many important details.

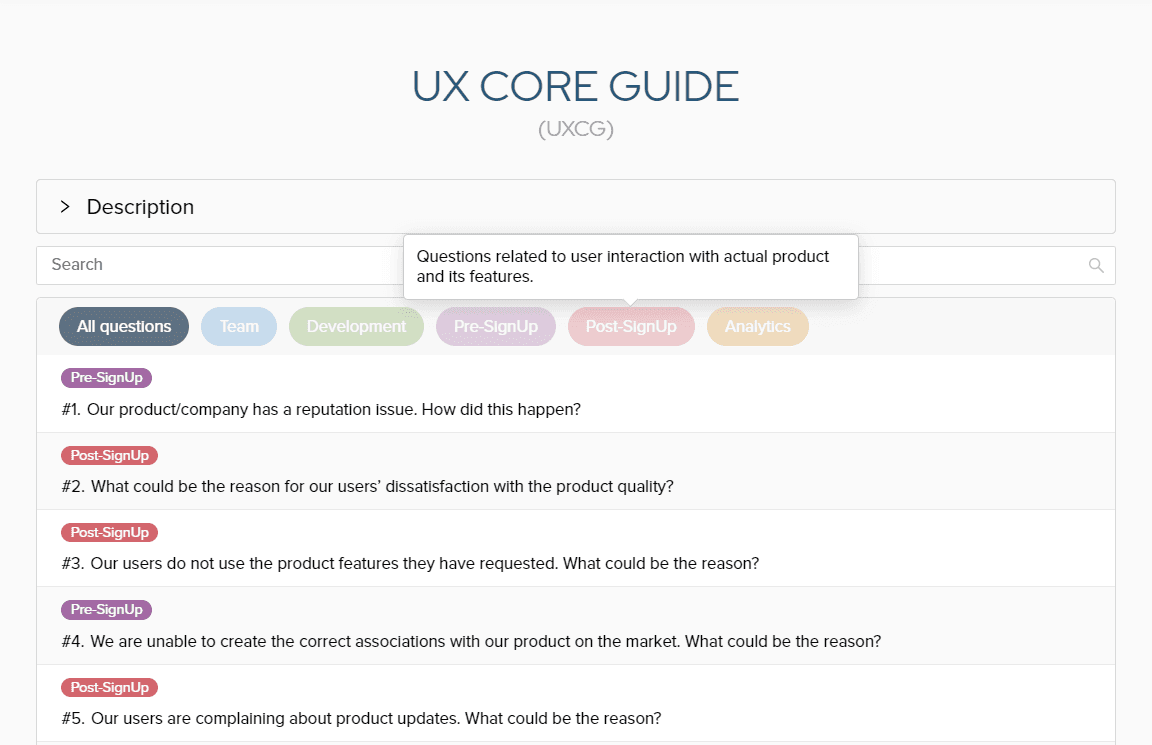

On December 23rd, 2020, I decided to create an add-on tool for UX Core called UX Core Guide (UXCG). The idea was to create an equivalent of software design patterns that would help solve the most common product management issues.

In programming, design patterns are general, reusable solutions to commonly occurring problems in software design. They are templates rather than finished code: they address fundamental questions without going into many accompanying details. This is what I wanted to achieve with UXCG.

As planned, a UXCG visitor would see a list of the most common questions that arise when working on a product. He would then choose a question relevant to him and, in the window that opens, see which cognitive biases answer it. Keeping the context of his question in mind, he would check each answer against that context and decide which ones best describe his problem.

The advantage of this approach is that the user receives around a dozen scientific vectors from which he can analyze the question.

The disadvantage of this approach: the user has to think. Yes, the answers are specific, but only within a certain abstraction, which means the user has to simulate the situation in his mind, taking the context of his question into account.

I have to admit I was worried that UXCG would not provide comprehensive answers. At the same time, I realized that a perfectly accurate answer requires considering so many details that describing even one question would take an entire book. So my doubts were really about the tool's usefulness. I spent a whole week running every night and wondering whether UXCG would be useful.

Finally, two factors shaped my decision to implement the project.

The first was Stack Overflow. In a sense, it had already implemented a few of the core ideas I wanted to bring to UXCG.

Let me explain. When a programmer opens Stack Overflow to find a solution to his question, he can open dozens of similar questions, analyze hundreds of answers, and, having seen all these points of view, come up with his own unique (!) solution to the problem. If he needs a solution to a complex issue, he is unlikely to find a ready-made piece of code that he can simply copy and paste into his project. He will need to adapt the code he finds to his system. Or he may decide to write a solution from scratch, borrowing another programmer's approach to the issue.

In other words, programming also works with abstractions, although it is considered a more specific discipline than management, where tasks are often associated with user emotions.

The second factor that convinced me to start this long journey is the universality of what I do. All the answers I wrote, in which I consider certain issues from different angles through various cognitive biases, will stay relevant for homo sapiens at least until the next cognitive revolution. That will happen only when companies like Neuralink bring BMI (Brain-Machine Interface) systems to a level where correlations between the patterns of our thoughts and our changing emotional states can be found easily. In other words, not soon.

4. User Experience Core Guide (UXCG)

On December 20th, 2020, I sketched out the project plan. At first, I tracked how much time each stage took, but once the total exceeded 300 hours, I stopped counting.

I came up with 63 universal questions that arise in different forms and contexts in product management. With proper extrapolation, each of these questions can stand in for several dozen others.

For each question, I showed at least five viewing angles from the perspective of different cognitive biases. The idea was that even if a particular answer is not relevant to the visitor, merely knowing that such an angle exists helps him avoid the described error in the future.

In total, I wrote 896 answers, rereading and editing all the content several dozen times.

The project launched on July 16, 2021: 174 days after I decided to build it (December 23rd, 2020) and almost a year after the release of UX Core (July 23rd, 2020).

Given my meticulous nature, this project was the hardest mental work I have ever done. I still can't predict what benefits it will bring, because I have not seen anything similar in form or content. I simply believe UXCG can help some people.

For those who want to follow the entire development process of this project and my thinking at each step, I wrote a separate article: UX Core Guide (geek content).

5. Project Future

“You're living inside of hardware, a brain, that was, like, million years old, and then there's this screen, and then on the opposite side of the screen, there's these thousands of engineers and supercomputers that have goals that are different than your goals, and so, who's going to win in that game?”

©Tristan Harris – former design ethicist at Google

The attentive one wins. As I said in the second part of this article, I do not consider mechanisms that control how IT giants work with human subconscious and attention to be the best solution to the issue. The most such an approach can achieve is to slow down their progress by letting their knowledge leak into smaller players' work manuals.

Let's look at the situation from the other side.

If Facebook's algorithms offer me something that I really like, I will buy it. Is Facebook guilty of knowing me better than I know myself? Nope.

Accusing IT giants of manipulation while ignoring people's personal responsibility for their own minds is ... strange. To me, it resembles blaming a driver who accidentally hit a pedestrian who ran across the street where crossing is prohibited. What does the law say in such cases? It often treats the pedestrian as a victim of his own disregard for the rules. Now imagine a person who bought an "unnecessary coffee machine" because Facebook nudged him into it. Is the company guilty? Should Facebook have used fewer nudging technologies? Should it have ignored the scientific facts of how the brain works? Maybe it should have hired a design ethicist? :-)

Please note that I am not excusing the actions of megacorporations. They simply do what anyone in their place would do. The strongest wins, and the IT world is no exception; it's just that in IT, the strongest is the smartest, and the smartest is the one who knows how to hold and direct user attention. IT companies do not share this power because they need to protect their competitiveness, and this makes total sense.

For my part, I invite all of us, ordinary people who understand the importance of knowing how the brain works, to share this knowledge with the world. I want to live in a world where people can recognize the nudging strategies used by all the companies around them. You can sense intuitively that everyone is fighting for your attention, or you can know exactly how that works and stay alert.

How can we achieve this?

Share this article with your friends and colleagues. Share UX Core and UXCG. Each of you has your own vision of how to make the world a better place, but instead of complaining about the tools on offer, share them until the world comes up with something better. That's all.

As for the project's further development, I see four directions, each fascinating in its own way. Two of them involve the internal processes of the largest IT companies in Silicon Valley. I will not describe them here, as I don't plan to pursue them yet because of the massive amount of work involved. However, if any readers want to help develop the project, here are some ways to do it:

- translating UXC and UXCG content into other languages and adding the translations to the project menu;

- creating short videos/animations that briefly describe the material in UXC / UXCG, ideally a one-minute video/animation for each bias in UXC and a two-minute one for each UXCG question;

- creating illustrations for each element of the project;

- publishing articles on your blogs;

- sharing this article and the UXC / UXCG projects with your friends and colleagues (short link: https://uxcg.io).

To take part, ask questions, or suggest something, write to me. I will be glad to help: alexanyanwolf@gmail.com / https://linkedin.com/in/alexanyan/

P.S. If you have done user behavior analysis and worked with cognitive biases at any of the companies mentioned in this article, please contact me on LinkedIn. We may have an exciting conversation :-)

Thanks,

Wolf Alexanyan